What is Gemini?

Gemini is a family of multimodal generative AI models developed by Google. A multimodal model can work with more than one type of input, such as images, video, audio, and text, combined in a single prompt. Depending on the model variant you choose, Gemini models accept text, or text and images, in the prompt and return a text response.

Gemini 1.0 comes in three sizes:

Gemini Ultra - Still under development. Google describes it as its largest and most capable model, intended for highly complex tasks.

Gemini Pro - Google has released two versions of Gemini Pro: gemini-pro and gemini-pro-vision. gemini-pro takes text as input and generates text as output. gemini-pro-vision takes images and text as input and generates text as output.
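To make the difference between the two Pro variants concrete, here is a minimal sketch of choosing between them based on the prompt. It assumes the `google-generativeai` Python SDK (not mentioned above), an API key you supply yourself, and images passed as `PIL.Image` objects. The helper names `pick_model_name` and `generate` are hypothetical, and the model names are the ones listed in this article, which may change over time.

```python
def pick_model_name(has_images: bool) -> str:
    """Choose the Gemini Pro variant: text-only, or text plus images."""
    return "gemini-pro-vision" if has_images else "gemini-pro"


def generate(prompt: str, images=None, api_key: str = "") -> str:
    """Send a prompt (and optional images) to Gemini Pro and return the text reply.

    Assumption: the google-generativeai package is installed
    (pip install google-generativeai) and api_key is a valid key.
    """
    # Imported lazily so the helper above works without the SDK installed.
    import google.generativeai as genai

    genai.configure(api_key=api_key)
    images = list(images or [])
    model = genai.GenerativeModel(pick_model_name(bool(images)))
    # Text-only prompts are passed as a string; multimodal prompts as a list.
    content = [prompt, *images] if images else prompt
    response = model.generate_content(content)
    return response.text


print(pick_model_name(has_images=False))
print(pick_model_name(has_images=True))
```

Calling `generate("Describe this picture", images=[img], api_key=...)` would route the request to gemini-pro-vision, while a call without images would use gemini-pro. In both cases the result is text.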

Gemini Nano - The most efficient model, designed for on-device tasks. It can run on capable Android devices (currently the Pixel 8 Pro).

How does Gemini Nano work?

Running these models on-device has several benefits. Sensitive data can be processed without leaving the device. The model is available offline, with no internet connection. It also saves cost, because the model is already installed as part of the operating system. Integrating AI capabilities into the Android system layer has a lot of potential. Let's look at how it works.

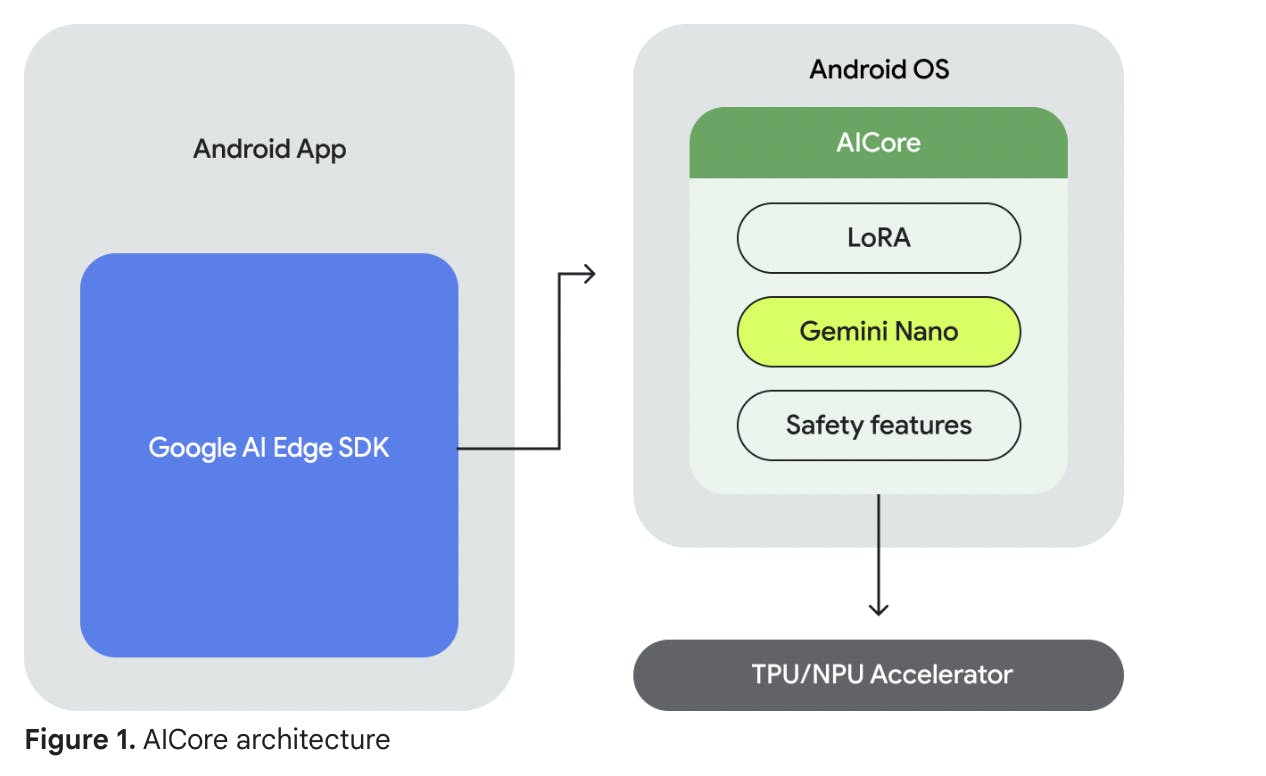

Android AICore provides access to foundation models that run on-device. To use the model, you need the Google AI Edge SDK, which provides an API for accessing Gemini Nano. You can also fine-tune these models using LoRA (low-rank adaptation). LoRA is an efficient fine-tuning method: instead of updating all of the model's weights, it keeps the pretrained weights frozen and trains a pair of small low-rank matrices whose product is added to selected weight matrices. Only a small fraction of the parameters is trained for the task at hand.
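The idea behind LoRA can be illustrated with a small, framework-free sketch. This is not how AICore or the AI Edge SDK applies LoRA; it only shows the general technique under common assumptions: the pretrained weight matrix W stays frozen, two small matrices A (r x d_in) and B (d_out x r) are trained, and the effective weight is W + (alpha / r) * B·A. The function names, matrix sizes, and `alpha` value are illustrative choices.

```python
import random


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where r is the LoRA rank."""
    r = len(a)                 # A has shape (r, d_in)
    scale = alpha / r
    delta = matmul(b, a)       # (d_out, r) @ (r, d_in) -> (d_out, d_in)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d_out, d_in, r):
    """Number of trained values in A and B combined."""
    return r * (d_in + d_out)


d_out, d_in, r = 8, 8, 2
rng = random.Random(0)

# Frozen pretrained weights (random stand-ins for illustration).
w = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
# Trainable low-rank factors: A starts small and random, B starts at zero,
# so the adapted model initially behaves exactly like the pretrained one.
a = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
b = [[0.0] * r for _ in range(d_out)]

w_eff = lora_effective_weight(w, a, b, alpha=4)
print("unchanged at init:", w_eff == w)
print("full weights:", d_out * d_in, "LoRA weights:", trainable_params(d_out, d_in, r))
```

In this toy case the saving is modest (32 trained values instead of 64), but it grows quickly with layer size, since the trained parameter count scales with r * (d_in + d_out) rather than d_in * d_out.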

AICore is currently available on Pixel 8 Pro devices, and a few apps already use Gemini Nano through AICore, such as the Pixel Recorder app and Gboard.

In conclusion, Google's Gemini family of multimodal generative AI models has a lot of potential. Because the models accept several types of input and generate text responses, they can be used in a wide range of applications. Running Gemini Nano on-device adds offline access, cost savings, and local processing of sensitive data. With AICore already available on Pixel 8 Pro devices and a few apps using Gemini Nano through it, it will be interesting to see how this technology develops.